CISO: Archeologist, Historian or Explorer?

- Phil Venables

- Jul 30, 2021

Updated: Jul 31, 2021

We talk about attackers being the enemy. Sometimes we talk about insider threats. But one of our biggest enemies is pernicious dependencies. We all have painful examples of these. Here’s one:

A long time ago, I came across this situation in an organization, relayed by the exasperated people in the middle of it. They wanted to upgrade some of the cryptography in a certain directory product from 56-bit DES to 3DES (told you this was a long time ago). This should have been as easy as flipping a simple switch, or at least so the vendor asserted. The problem was that the update also changed the way some of the file access protocols worked, which in turn affected which version of NFS was needed for interoperability with the enterprise Unix environment. So, ok, go upgrade NFS, right? Now the problem was that the new version of NFS deprecated, in a non-obvious way, some features that an internal proprietary message bus heavily relied upon. Super, so now they planned to change the message bus, but doing that altered some aspects of its performance (I’m glossing over a lot of detail here), which then meant all the applications that relied on that message bus needed to be recompiled. Yes, in the days when very little was continuously tested and deployed. So, off they went to do all that work. Now, the final problem: one of the apps hadn’t been changed in years, there were no developers with current experience of it, and the source code wasn’t in an easily rebuildable state, so it was determined that the best approach was to deprecate it and move its functions to another app, which then required some development work.

In the end it took about 2 years to get all this done, just to move to 3DES on one directory product. I can imagine that if there had been some kind of incident, and the disclosure report had revealed that this organization was only using 56-bit DES, the global snark meter would have gone to red and everyone would have been asking why this organization didn’t prioritize things, what the security team was doing, whether they knew better, and so on.
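The cascade in this story can be pictured as a small directed graph where an edge means "changing this forces a change to that". The following is only a minimal sketch under that assumption: the component names (`directory-crypto`, `app-a`, and so on) and the `change_cascade` helper are hypothetical labels for the anecdote, not a real inventory or tool.

```python
from collections import deque

# Hypothetical edges: changing the KEY forces a change to each VALUE.
# The names are illustrative stand-ins for the components in the story.
FORCES_CHANGE = {
    "directory-crypto": ["nfs-version"],
    "nfs-version": ["message-bus"],
    "message-bus": ["app-a", "app-b", "legacy-app"],
    "legacy-app": ["replacement-app"],
}


def change_cascade(start, edges):
    """Return every component transitively forced to change, breadth-first."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for dependent in edges.get(node, []):
            if dependent not in seen:
                seen.add(dependent)
                order.append(dependent)
                queue.append(dependent)
    return order


cascade = change_cascade("directory-crypto", FORCES_CHANGE)
print(cascade)
```

Even in this toy version, a "simple switch" on one product pulls in six other pieces of work. The hard part in practice is not the traversal but discovering the edges in the first place.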

Now, there are plenty of easy (or easier) to apply updates that can and should be done, and organizations that don’t do them should be forced to reassess their decisions. But there are also plenty of patches, updates and configuration changes that appear simple but can be immensely difficult and time-consuming in reality.

Another example I saw at a few organizations was rapidly upgrading Struts through a spate of vulnerabilities a few years ago. Most apps were pretty straightforward, but some were amazingly difficult because of version-specific dependencies that developers had, often inadvertently, slipped into. Some of these dependencies were so difficult that the upgrade didn’t get done and, it pains me to say, WAFs had to be used for some tactical mitigation during the extended period of upgrading the apps. Again, in the event this was not sufficient to mitigate attacks, the people on the sidelines would claim with glee that those organizations weren’t competent or weren’t giving security enough of a priority, despite the significant efforts of those security teams.

To be fair, though, there is a point there: perhaps they didn’t invest quickly enough in thoroughly modernizing all their applications and getting them free of dependencies, continuously integrated and deployed. But those of you who have been around corporate IT (or any IT) for the past few decades know that it is never as simple as this. This makes it even more of an imperative that we all keep modernizing our application stacks and engineering them in ways that minimize dependencies and tight coupling. Thankfully, modern cloud or cloud-like on-premises approaches compel an architecture that drives you to this, or at least makes it a more natural way of operating.

Now, what does this aggregated dependency risk mean for the security team? It means they have to discover and develop an almost irrationally deep understanding of how their organization and its technology work. They have to become some combination of archeologist, cartographer, explorer, librarian, historian and anthropologist.

Archeologist

A former colleague of mine once described technology risk / information security as an “industrial archeology” profession. This has stuck with me. In pretty much every situation there are layers of technology, patterns or practices that stem from epochs of leadership or approaches. You can almost literally dig down into all the enterprise’s systems and find these layers and spot the extinction events, the Cambrian explosions and other moments. Ah, the “client-server” layer rose from the remains of the “mainframe era”, and those shiny new APIs are just an abstraction layer on top of an old object broker interface, which in turn was an abstraction for an LU6.2 mainframe access layer, which in turn abstracted an old CICS/IMS transaction. Even more current and so-called digitally native environments, with just a few years of development, have these layers. These represent past in-vogue design patterns and the use of that particular era’s NoSQL store, cache or transaction processing framework. We're all probably, right now, laying down some sediment that future versions of us will discover and gaze at in wonder or horror.

When you're driving a security program you constantly uncover these layers and artifacts of past decisions that cause delays and unpredictability in your otherwise pristine plans. Doing some archeology upfront is well worth the time.

Cartographer

Another big part of the security program is to construct a map of your domain, whether it’s the enterprise business processes, the extended supply chain, the technology and software inventory, all the way through to people and their roles. Having a precise and constantly updated map is crucial for visibility into what you need to prioritize. Without a basic map of your enterprise at some level of abstraction you’ll never be able to construct sufficient roadmaps to plot the course to your desired risk destination. The other useful aspect of thinking of yourself as a cartographer is that you can create layers on your basic map, annotating it to reveal specific patterns of risk or to aid other sense-making, a lot like how layers work in Google Earth or in Geographic Information Systems (GIS) technology. On that point, I’ve often been surprised how little of the GIS tech-scape has made it across into technology risk management.
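To make the layering idea a little more concrete, here is a rough sketch of a base map with overlays. Everything in it is an assumption for illustration: the asset names, the two layers (`internet_facing`, `unpatched_critical`) and the helper functions are hypothetical, and a real map would be fed from inventories rather than hard-coded.

```python
# Base map: a hypothetical asset inventory (names and fields are illustrative).
BASE_MAP = {
    "payments-api": {"owner": "payments", "kind": "service"},
    "hr-portal": {"owner": "hr", "kind": "application"},
    "build-server": {"owner": "platform", "kind": "infrastructure"},
}

# Each layer annotates a subset of the base map, a bit like a GIS overlay.
LAYERS = {
    "internet_facing": {"payments-api": True, "hr-portal": True},
    "unpatched_critical": {
        "hr-portal": "2 open findings",
        "build-server": "1 open finding",
    },
}


def overlay(base, layers, selected):
    """Merge each asset's base record with annotations from the selected layers."""
    view = {}
    for asset, record in base.items():
        merged = dict(record)
        for name in selected:
            if asset in layers[name]:
                merged[name] = layers[name][asset]
        view[asset] = merged
    return view


def in_all_layers(base, layers, selected):
    """List assets present in every selected layer, i.e. where risks stack up."""
    return sorted(a for a in base if all(a in layers[n] for n in selected))


selected = ["internet_facing", "unpatched_critical"]
view = overlay(BASE_MAP, LAYERS, selected)
hotspots = in_all_layers(BASE_MAP, LAYERS, selected)
print(hotspots)
```

Keeping the layers separate from the base map means each one can be owned and refreshed independently, then combined only when you need to ask a specific question.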

Explorer

In most organizations, before you can produce the map you have to discover the environment. This might involve creating the inventories you need to permit your mapping. Many organizations are still surprised to discover whole realms of IT and vendors in their midst. I’ve heard of organizations (thankfully not ones I’ve worked at) where the security team was literally in the process of finding whole departments that, for whatever reason, had managed to stay off the corporate grid for a long time and had to be brought, like a long-lost tribe, into the civilization of the enterprise.

This can get worse. A long time ago, I was bemoaning to a peer in another company how difficult it was to do real-time discovery of network endpoints. He made me feel a lot better by telling me they were still finding whole data centers. You had to laugh, otherwise you’d just cry.

A big part of the exploration is also to discover how the organization works, the processes and wider dependencies that can work against the change you need to instill. This could be everything from the purchase order process which might stall a business unit from adopting the controls you (and they) want, all the way through to discrepancies in job titles and career development ladders which might hamper the workings of your embedded security roles.

Librarian

I’ve yet to find any organization that has done a truly great job of organizing its internal information on policies, controls, systems operation, and business processes in any meaningful way. A security program needs this, and needs to promulgate it, and yet it is rarely given sufficient attention. I’ve known some organizations to actually hire library scientists, taxonomists and ontologists to help with this, but in all the cases I’ve seen, the work has eventually died.

It’s important to use frameworks to encode knowledge so others can usefully assess their state against them. Google’s SLSA framework for software supply chain security is a great example of this.

Incidentally, hat tip to Richard Westmoreland for his view on what InfoSec is:

Historian

Another critical but often neglected aspect of the security program is its history. This is often undocumented and left to oral history passed down from the long-tenured employees. This could be the key decisions, the incidents or close-calls, customer issues, and the failed projects that drove certain approaches.

These are important to know, as the organization’s history often drives a fear of change, e.g. “we tried X once and it nearly killed us, never again”. The security historian will know that event and will be able to counter: “yes, but back then we didn’t do Y and Z to make that X work fine, so now we’ll be good.” The security historian often knows, or can find out, the influencers of the influencers of key decision makers. For example, in most organizations there is a shadow, unwritten organization chart of who the actual decision makers are. These are often the former trusted lieutenants of organization leaders who are now in other parts of the company but to whom the leader still informally reaches out for a “what do you think about this proposal?” gut-check before any significant decision. If those hidden influencers aren’t brought into your process then you might have less control over the outcomes you seek.

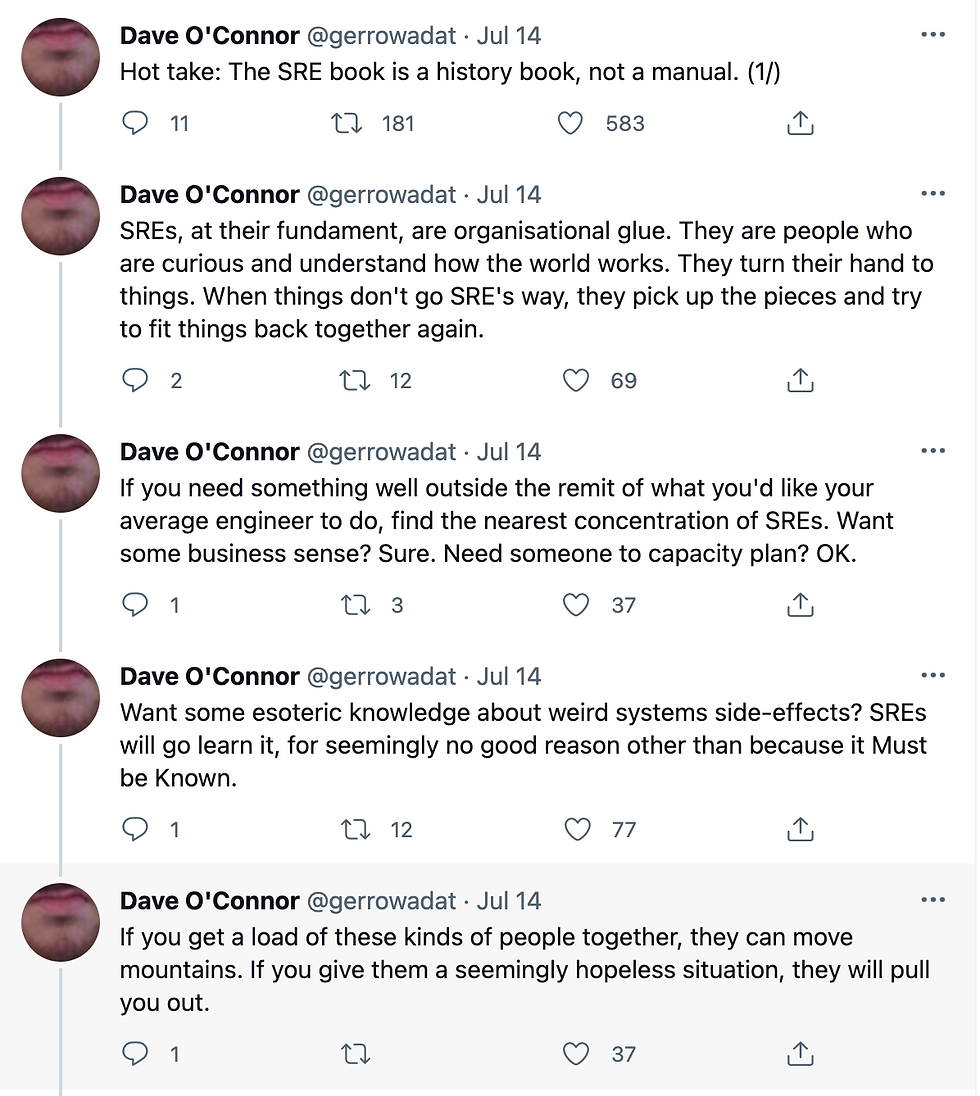

When you’re starting out on your security history exercise it’s always worth talking to the long-tenured employees. They are a fountain of knowledge that is crucial for your success. Dave O’Connor also has a great take on the role of SRE for this.

Anthropologist

Finally, we have the role of anthropologist. This brings everything together. Our environments are a complex system at multiple levels of abstraction, constrained by technology archeology, organization history and, mostly, people. This is not just the people in the organization; it’s a much bigger ecosystem of customers, suppliers, regulators, auditors and other stakeholders. Each of these communities has its own culture and approaches that need studying and understanding. Above all, the security anthropologist needs to understand how these "societies" interact so that the right approaches to improved security can be determined.

Bottom line: Pernicious dependencies are the enemy. Discovering these dependencies needs a combination of archeology, cartography, exploration, librarianship and immersing yourself in your organization’s history and social anthropology. Once you know the dependencies, you are best positioned to modernize your application architecture with a view to revealing, managing and reducing dependencies as one of your most critical security activities. Modern software architectures are your best bet for this, and moving to the cloud (or using cloud-like patterns on-premises) in the right way can be a big impetus.
